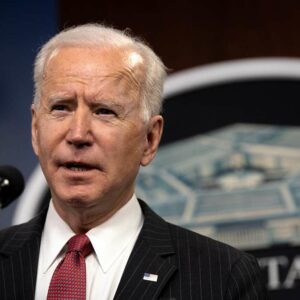

President Biden’s executive order (EO) on AI regulation, issued in October, offers a thoughtful vision for how to govern the technology but falls short on key enforcement measures. The lack of enforcement is a dangerous mistake, because AI poses risks across all aspects of life: privacy, government intrusion, bias and discrimination, plagiarism, protection of intellectual property, and the survival of humanity itself.

As the EO recognizes, even an apparently benign AI system can still exacerbate these risks. The most advanced AI models are inherently “dual use,” because they are powerful enough to have a wide variety of applications. An AI intended to design new medications could also be used to design bioweapons; an AI intended to help build new websites could also be used to launch automated cyberattacks.

To guard against these risks, the EO requires AI developers to report on the results of red-teaming exercises. Red-teaming is a process whereby experts attempt to exploit potential vulnerabilities in a system. This process is critical to building safe AI systems because it helps identify potential risks. The executive order appropriately turns to the National Institute of Standards and Technology (NIST) to develop standards for these exercises. This is an excellent choice, because NIST is well known for its ability to foster a collaborative approach between the private sector and the government on highly technical issues. For example, NIST’s cybersecurity standards were drafted with detailed input from private companies, and today they are used to protect federal agencies against cyberthreats through the Federal Risk and Authorization Management Program (FedRAMP), which standardizes monitoring of cloud services.

A NIST-supported effort to promote red-teaming would represent a critical step toward safer AI, but only if companies participate in it. Oddly, the EO does not require companies to actually conduct any red-teaming exercises for high-risk models; instead, all they have to do is report the results of any exercises that they do choose to run.

Similarly, companies must report on safety measures adopted in response to the risks uncovered during testing, but there are no minimum standards for these safety measures. The EO leaves it to each developer to determine if the safety measures implemented for their own high-risk systems are adequate.

Additionally, the order requires cloud providers who sell computing time overseas to report on the computing power they are exporting. However, the Biden framework offers no mechanism to block such sales. Falcon 180B, one of the most powerful large language models, was trained by the United Arab Emirates using compute rented from Amazon Web Services. There is no law preventing the next such model from being built using computers rented by China or Iran.

The order’s tepid enforcement mechanisms are echoed by its easygoing, unhurried approach to developing a regulatory agenda. Instead of ordering federal agencies to take urgent action, the EO directs them to “consider initiating a rulemaking.” State Department officials are not required to help us attract more technical talent from overseas – all they must do is consider doing so. The Commerce Department is not actually required to take action to rein in deepfake videos; rather, it must produce a survey of the tools available to assist with such efforts. Decisive action that would grant new rights to, or impose new responsibilities on, AI developers is largely missing.

This leisurely pace might be appropriate if the administration were considering the perils of AI for the first time, but AI is no longer a new issue for the federal government. The Obama Administration released a major report on the perils of AI back in 2016, and the Trump Administration issued an order in 2020 directing all agencies to develop a plan to regulate their AI systems. The technology is advancing too rapidly for us to spend another four years writing advisory reports. We’ve spent enough time exploring the dangers of advanced AI: now we need to address those dangers.

We know this is possible, because there’s one topic where the Biden Administration did flex its federal government muscle: biosecurity. The executive order directs the Office of Science and Technology Policy to develop standards to prevent untrustworthy buyers from purchasing synthetic nucleic acids. Crucially, the order is backed up by a threat to cut federal funding. Once the standards are ready, any biology lab that does not follow the government’s procurement standards will lose access to all life sciences funding.

The danger of a totally unregulated market in DNA is limited for now; most terrorists and pranksters do not know enough biology to intelligently place an order for a truly dangerous string of DNA. However, as AI continues to grow more capable, it will learn to provide expert advice on biology, which means that we must cut off access to such DNA at the source. If implemented well, Biden’s order could do just that; federal funding for life sciences is so pervasive in the biotech sector that few, if any, major companies will be willing to risk doing without it. In the months to come, the Biden Administration should develop a similarly effective approach to the other risks posed by advanced AI.

President Biden’s EO shines a spotlight on the urgent need for governance in this rapidly evolving field, yet it stops short of delivering the robust regulatory framework that the current landscape demands. The order acknowledges the risks AI poses – from privacy concerns to existential threats – but its provisions lack the enforcement power necessary to mitigate these dangers. This is tragic: the stakes are high, and the window for meaningful intervention is narrow.